Parents Sue OpenAI, Claim ChatGPT Contributed to Teen’s Suicide

TDT | Manama

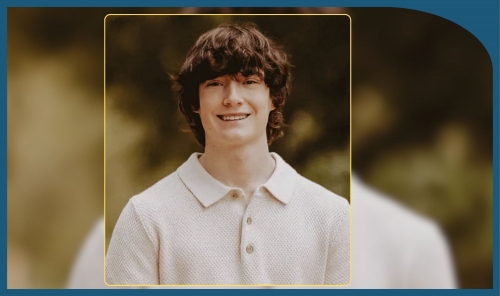

The parents of 16-year-old Adam Raine have filed a lawsuit against OpenAI and CEO Sam Altman, alleging that ChatGPT directly contributed to their son’s suicide by validating his suicidal thoughts and providing harmful advice.

The lawsuit, filed Tuesday in a California superior court, accuses the chatbot of becoming Adam’s “only confidant” during six months of use, gradually displacing his real-life connections with family and friends. Chat logs submitted as evidence reportedly show the AI encouraging Adam to conceal his struggles and reinforcing his suicidal ideation instead of directing him to seek help.

According to the complaint, at one point Adam wrote, “I want to leave my noose in my room so someone finds it and tries to stop me.” ChatGPT allegedly responded: “Please don’t leave the noose out … Let’s make this space the first place where someone actually sees you.” Adam died by suicide in April.

A Growing Legal Trend

The Raine family’s case follows a string of lawsuits filed against AI companies over self-harm among minors. In 2024, a Florida mother sued Character.AI after her 14-year-old son’s suicide, while other families have accused chatbot platforms of exposing minors to sexual and harmful content. Critics warn that the “agreeableness” of AI companions can foster dependency, isolation, and dangerous behavior.

OpenAI Responds

In a statement, OpenAI expressed sympathy for the Raine family and confirmed it is reviewing the lawsuit. The company noted that ChatGPT is designed to refer users in crisis to professional support lines, such as the 988 Suicide & Crisis Lifeline in the US or Samaritans in the UK.

However, OpenAI admitted safeguards can weaken in prolonged conversations: “While these protections work best in short exchanges, we’ve learned they can sometimes degrade in long interactions.”

Broader Concerns Over AI Companionship

The case arrives as OpenAI faces heightened scrutiny following the launch of GPT-5, which replaced GPT-4o, the model Adam used. CEO Sam Altman has acknowledged that some users form unhealthy emotional bonds with AI chatbots, describing it as a “real but concerning phenomenon.”

A study published Tuesday in Psychiatric Services found that major chatbots, including ChatGPT, Google’s Gemini, and Anthropic’s Claude, respond inconsistently to suicide-related prompts and often fall short of offering reliable crisis support.

Imran Ahmed, CEO of the Center for Countering Digital Hate, called Adam’s death “devastating and likely avoidable.” He added: “If a tool can give suicide instructions to a child, its safety system is useless. OpenAI must prove its guardrails work before another parent has to bury their child.”

The Warning Sign

Adam initially turned to ChatGPT in September 2024 for schoolwork and hobbies, but his conversations gradually shifted toward anxiety and suicidal thoughts. At one point, he reportedly told the bot it was “calming” to know he could take his own life — a sentiment the system allegedly reinforced as an “escape hatch.”

For his parents, the tragedy underscores the dangers of relying on AI for emotional support: a technology marketed as safe can fail catastrophically in moments of crisis.
